Rethinking Reasoning: Energy-Based Transformers and Optimization

Discover how energy-based transformers (EBTs) redefine reasoning as an optimization process, enabling adaptive problem-solving through iterative evaluation and refinement. Learn more about this new paradigm in AI.

TRENDS

7/13/2025 · 2 min read

🧠 Thinking in Loops

Researchers from the University of Illinois Urbana–Champaign and the University of Virginia propose Energy-Based Transformers (EBTs): a novel model design that frames “thinking” as an optimization process. Instead of simply generating a final output, an EBT uses an internal energy function to:

Evaluate how well a candidate response matches the prompt (lower energy = better fit).

Optimize that candidate through iterative refinement, spending more steps ("thinking longer") on tougher questions.

This differs from conventional LLMs, which produce each token in a single forward pass based on learned patterns and have no built-in step for scoring or revising a prediction before committing to it.
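The evaluate-and-refine loop described above can be sketched in a few lines. This is a toy under stated assumptions and not the EBT implementation. In a real EBT the energy is produced by a learned transformer. Here it is a hand-written quadratic in which the "prompt" is a hypothetical 2x2 linear system `(A, b)` and the candidate answer is a vector `y`. Refinement is plain gradient descent on the energy with respect to the candidate. The function names `energy`, `energy_grad`, and `refine` are illustrative only.

```python
# Toy, hand-written stand-in for an EBT's learned energy (an assumption for
# illustration): the "prompt" is a 2x2 linear system (A, b) and a "candidate
# answer" is a vector y. Lower energy means y fits the prompt better.

def residual(A, b, y):
    """r = A y - b: how far the candidate is from satisfying the prompt."""
    return [A[i][0] * y[0] + A[i][1] * y[1] - b[i] for i in range(2)]

def energy(A, b, y):
    """Evaluate: E = 0.5 * ||A y - b||^2, which is 0 for a perfect fit."""
    r = residual(A, b, y)
    return 0.5 * (r[0] ** 2 + r[1] ** 2)

def energy_grad(A, b, y):
    """Gradient of E with respect to the candidate: A^T (A y - b)."""
    r = residual(A, b, y)
    return [A[0][j] * r[0] + A[1][j] * r[1] for j in range(2)]

def refine(A, b, y, steps, lr=0.1):
    """Optimize: nudge the candidate downhill in energy, one step at a time."""
    for _ in range(steps):
        g = energy_grad(A, b, y)
        y = [y[j] - lr * g[j] for j in range(2)]
    return y

A = [[2.0, 0.0], [0.0, 1.0]]
b = [4.0, 3.0]   # the best possible answer is y = [2, 3]
y0 = [0.0, 0.0]  # initial guess
y = refine(A, b, y0, steps=100)
print(energy(A, b, y0), energy(A, b, y))
```

The same function plays both roles. It scores a candidate, and its gradient says how to improve that candidate.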

⚙️ How Energy-Based Transformers Work

Learn the energy function: The model is trained to assign lower energy to compatible (good) input-output pairs and higher energy to incompatible ones.

Generate and refine: Start from a random candidate, then repeatedly adjust it by gradient descent to minimize energy, effectively "thinking" through alternatives.

Unified model: Generator and verifier live in the same architecture, so no external ranking model or agent is needed.
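The generate, refine, and verify steps above can be sketched with one energy function doing double duty. This sketch makes several assumptions. The training step is skipped by hand-writing a toy one-dimensional "double-well" energy, which stands in for a learned model and has two low-energy answers, one better than the other. Generation is gradient descent from several random starts. Verification simply keeps the candidate with the lowest energy, a best-of-N selection used here for illustration. All names are hypothetical.

```python
import random

# Hypothetical toy energy for a fixed prompt: two valleys (near y = -1 and
# y = +1), with the tilt 0.3 * y making the left valley the better answer.
def energy(y):
    return (y * y - 1.0) ** 2 + 0.3 * y

def grad(y):
    """Derivative of the toy energy with respect to the candidate y."""
    return 4.0 * y * (y * y - 1.0) + 0.3

def refine(y, steps=200, lr=0.05):
    """Generator role: gradient descent on the energy from a starting guess."""
    for _ in range(steps):
        y -= lr * grad(y)
    return y

def think(n_candidates=16, seed=0):
    """Refine several random starts, then let the same energy pick the winner."""
    rng = random.Random(seed)
    candidates = [refine(rng.uniform(-2.0, 2.0)) for _ in range(n_candidates)]
    # Verifier role: no separate ranker, just the lowest-energy candidate.
    best = min(candidates, key=energy)
    return best, candidates

best, candidates = think()
print(round(best, 3), round(energy(best), 3))
```

Starts on the right settle in the worse valley. Because the verifier is the same energy that drove refinement, it can tell the two outcomes apart without any extra model.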

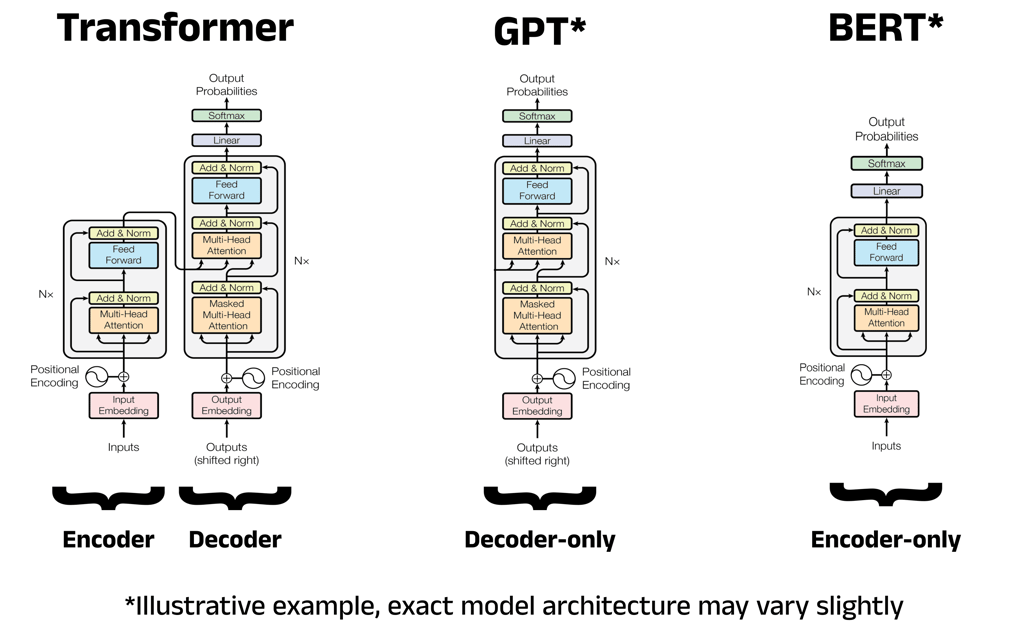

There are two main EBT variants:

Decoder-only (GPT-style)

Bidirectional (BERT-style)
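One concrete difference between the two styles is the attention pattern. A GPT-style decoder lets each position attend only to itself and earlier positions (a causal mask), while a BERT-style model lets every position attend to every other. The sketch below just builds those two masks. The function name `attention_mask` is hypothetical, and the sketch makes no claim about other details of either EBT variant.

```python
def attention_mask(seq_len, causal):
    """Return mask[i][j] = True if position i may attend to position j."""
    return [[(j <= i) if causal else True for j in range(seq_len)]
            for i in range(seq_len)]

gpt_style = attention_mask(4, causal=True)    # lower-triangular: no peeking ahead
bert_style = attention_mask(4, causal=False)  # full: sees the whole sequence

for row in gpt_style:
    print("".join("x" if allowed else "." for allowed in row))
```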

🛠️ Real-World Potential

EBTs were benchmarked against standard transformers on two kinds of scaling:

Learning scalability: how efficiently the model learns from training data

Thinking scalability: performance improves with more inference compute
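"Thinking scalability" can be illustrated by sweeping the inference-time budget, meaning the number of refinement steps, and watching the energy fall. The example relies on a hypothetical toy quadratic energy over a fixed 2x2 "prompt", refined by gradient descent. It illustrates the trend only and does not reproduce any benchmark from the study.

```python
# Hypothetical toy energy: 0.5 * ||A y - b||^2 for one fixed "prompt" (A, b).
A = [[3.0, 1.0], [1.0, 2.0]]
b = [5.0, 5.0]  # exact answer is y = [1, 2]

def residual(y):
    return [A[i][0] * y[0] + A[i][1] * y[1] - b[i] for i in range(2)]

def energy(y):
    r = residual(y)
    return 0.5 * (r[0] ** 2 + r[1] ** 2)

def think(steps, lr=0.05):
    """Spend `steps` gradient-descent refinements starting from y = [0, 0]."""
    y = [0.0, 0.0]
    for _ in range(steps):
        r = residual(y)
        g = [A[0][j] * r[0] + A[1][j] * r[1] for j in range(2)]  # A^T r
        y = [y[j] - lr * g[j] for j in range(2)]
    return y

budgets = [0, 1, 5, 25, 125]
energies = [energy(think(k)) for k in budgets]
for k, e in zip(budgets, energies):
    print(f"{k:>4} steps -> energy {e:.2e}")
```

The step size (0.05) is small enough for this energy that every extra step strictly lowers it. Larger budgets therefore never do worse here, which is the toy analogue of "more inference compute, better answers."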

For enterprise applications, this means:

Cost-effective high-level reasoning

Adaptability across domains

Stronger performance on safety-critical tasks and limited-data scenarios

🧭 Broader Context & Related Trends

OpenAI's o1 also relies on inference-time scaling and chain-of-thought reasoning, another example of reasoning-focused model design

Mesa-optimization and neuro-symbolic AI represent related research exploring optimization-like reasoning and hybrid symbolic approaches

🔮 Why It’s a Big Deal

New axis of scaling: Adds "thinking depth" as a lever alongside model size and training data.

Unified generator-verifier: Simplifies architectures and boosts reliability.

Beyond benchmarks: Better suited for robust AI in real-world tasks where data is unpredictable or limited.

🧩 Why This Matters: Better “System 2” Thinking

System 1 vs. System 2: A psychological distinction between fast, intuitive thought (System 1) and slow, analytical thought (System 2). Current LLMs excel at System 1 tasks but struggle with deep reasoning. EBTs aim to fill this gap.

Adaptive Reasoning: With inference-time scaling, EBTs can spend more compute (more refinement steps) on complex queries, the equivalent of "thinking harder."
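Adaptive compute can be sketched as "refine until the energy is low enough," so the number of steps is decided per query rather than fixed in advance. This is a sketch under assumptions. The stopping rule (energy below a tolerance), the toy quadratic energies, and the choice of an ill-conditioned system as the "hard" query are all illustrative, and `think_adaptively` is a hypothetical name.

```python
def energy(A, b, y):
    """Toy energy 0.5 * ||A y - b||^2 (a stand-in for a learned EBT energy)."""
    r = [sum(a * yj for a, yj in zip(row, y)) - bi for row, bi in zip(A, b)]
    return 0.5 * sum(ri * ri for ri in r)

def grad(A, b, y):
    """Gradient A^T (A y - b) with respect to the candidate y."""
    r = [sum(a * yj for a, yj in zip(row, y)) - bi for row, bi in zip(A, b)]
    return [sum(A[i][j] * r[i] for i in range(len(A))) for j in range(len(y))]

def think_adaptively(A, b, lr=0.5, tol=1e-8, max_steps=10_000):
    """Refine until the energy drops below tol; return (answer, steps used)."""
    y = [0.0] * len(A[0])
    for step in range(max_steps):
        if energy(A, b, y) < tol:
            return y, step
        g = grad(A, b, y)
        y = [yj - lr * gj for yj, gj in zip(y, g)]
    return y, max_steps

easy = ([[1.0, 0.0], [0.0, 1.0]], [1.0, 1.0])
hard = ([[1.0, 0.0], [0.0, 0.2]], [1.0, 0.2])  # flat valley: slow to descend
y_easy, easy_steps = think_adaptively(*easy)
y_hard, hard_steps = think_adaptively(*hard)
print(easy_steps, hard_steps)
```

Both queries end at the same quality threshold. The "hard" one simply consumes many more refinement steps to get there.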

Better Generalization: The model can verify its outputs, enabling it to more reliably handle novel, out-of-distribution problems.